
Most professionals lean towards Nvidia graphics cards whenever there’s work involved. Although many workstation applications still work better with Nvidia GPUs, AMD is slowly but surely catching up in this race.

One such (recent) example is Maxon finally announcing support for AMD Radeon Pro GPUs on Windows.

Until now, access to Maxon’s Redshift GPU render engine was limited to only Nvidia GPUs (unless you used a Mac and could deal with a Beta release).

With AMD showing that they’re finally ready to wrestle Nvidia for that performance crown, adoption is on the rise.

But is the professional market ready for them yet?

Honestly, I’m not sure. And ‘not being sure’ is something professionals, whose livelihoods depend on their workstations, can’t afford to risk.

Let’s dive a bit deeper and look into how these two GPU behemoths battle it out in 2022! If you’re here for a quick recommendation for some common workloads, here you go:

| Category/Task | No-Compromise Performance | CG Director Best Value | Budget Recommendation |
|---|---|---|---|
| Video Editing / Encoding | RTX 3080 / RTX 3090 Ti | RTX 3070 Ti | RTX 3060 Ti |
| Viewports & 3D Modeling | RTX 3080 Ti | RTX 3070 | RTX 3050 |
| GPU Rendering | RTX 3090* | RTX 3080 | RTX 3060 Ti |
| CAD / CAM | RTX 3090 | RTX 3070 | RTX 3060 |

*If you plan to run multiple GPUs, the RTX 3080 is a better option.

AMD Radeon vs. Nvidia GeForce: A Quick History Recap

ATI vs. Nvidia vs. 3dfx: 3 Competitors, 1 Crown

Both AMD and Nvidia have been pushing the envelope when it comes to computing and graphics for years now.

I’d go as far as saying that without the intense competition between ATI and Nvidia in those early years, we wouldn’t be where we are today.

But back then, both Nvidia and ATI had another competitor to contend with – 3dfx Interactive, based out of San Jose, California.

3dfx Interactive was a well-known name in those days because it pretty much pioneered consumer 3D graphics accelerator cards in the 90s.

Sadly, things went sideways for the company in the late 90s. 3dfx struggled to keep up with Nvidia and ATI’s crown-grabbing frenzy, agreed to sell most of its assets to Nvidia in late 2000, and filed for bankruptcy in 2002.

ATI Radeon and Nvidia GeForce

While the Nvidia GeForce name popped up in 1999, ATI’s ‘Radeon’ brand didn’t show up until 2000 when ATI launched the Radeon DDR – ATI’s first fully DirectX 7 compliant graphics card.

Radeon DDR Graphics Card and GeForce 2 GTS (Yes, dinosaurs on graphics card boxes were a thing back then, and it was cool. No one can convince the teenager inside me otherwise.)

At the time, Nvidia had launched the second generation of GeForce GPUs – the GeForce 2 series.

This family of graphics cards included the GeForce 2 GTS, GeForce 2 Pro, GeForce 2 Ultra, and GeForce 2 Ti.

Quake 3 Arena Benchmark

Top-tier 2nd Gen Nvidia GPUs topped the charts, with the ATI Radeon DDR and the 3dfx Voodoo5 barely keeping up with even Nvidia’s first-generation GeForce 256 GPU.

This performance gap became somewhat of a tradition, with Nvidia managing to stay a step ahead of ATI when it came to top-tier performance – forcing the competition to cut profit margins and offer better value products to remain in the game.

AMD went on to acquire ATI in 2006 for a cool $5.4 billion. Although many financial analysts questioned the move at the time, AMD has gone on to leverage its Radeon arm quite well over the years.

AMD Radeon vs. Nvidia GeForce in the Late 2000s

Nvidia maintained a tight grasp on its performance crown for the longest time. Of course, this doesn’t mean that Radeon always played catch-up to Nvidia.

Let’s take a trip back to Jan 2009.

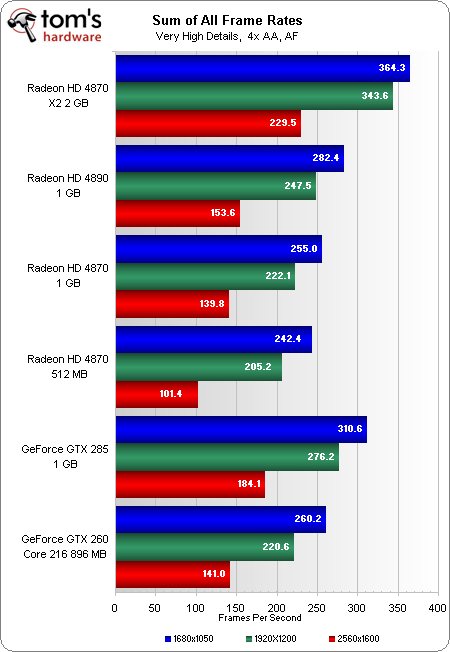

Nvidia’s GeForce GTX 200 series was squaring off against AMD’s Radeon HD 4000 parts, both of which had launched in mid-2008.

Take a look at how they stacked up against each other!

Source: Tom’s Hardware

Radeon HD 4870X2 Benchmark summary

AMD’s commitment to winning back the crown after Nvidia embarrassed its Radeon HD 3870 X2 with the GeForce 9800 GX2 is palpable with this generation.

The Radeon team did everything in its power to wrest back the crown from Nvidia, and it was successful (in a way)!

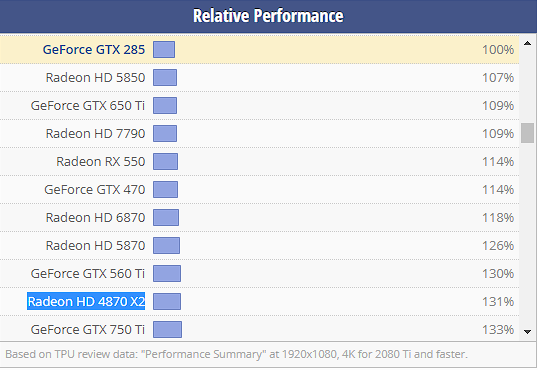

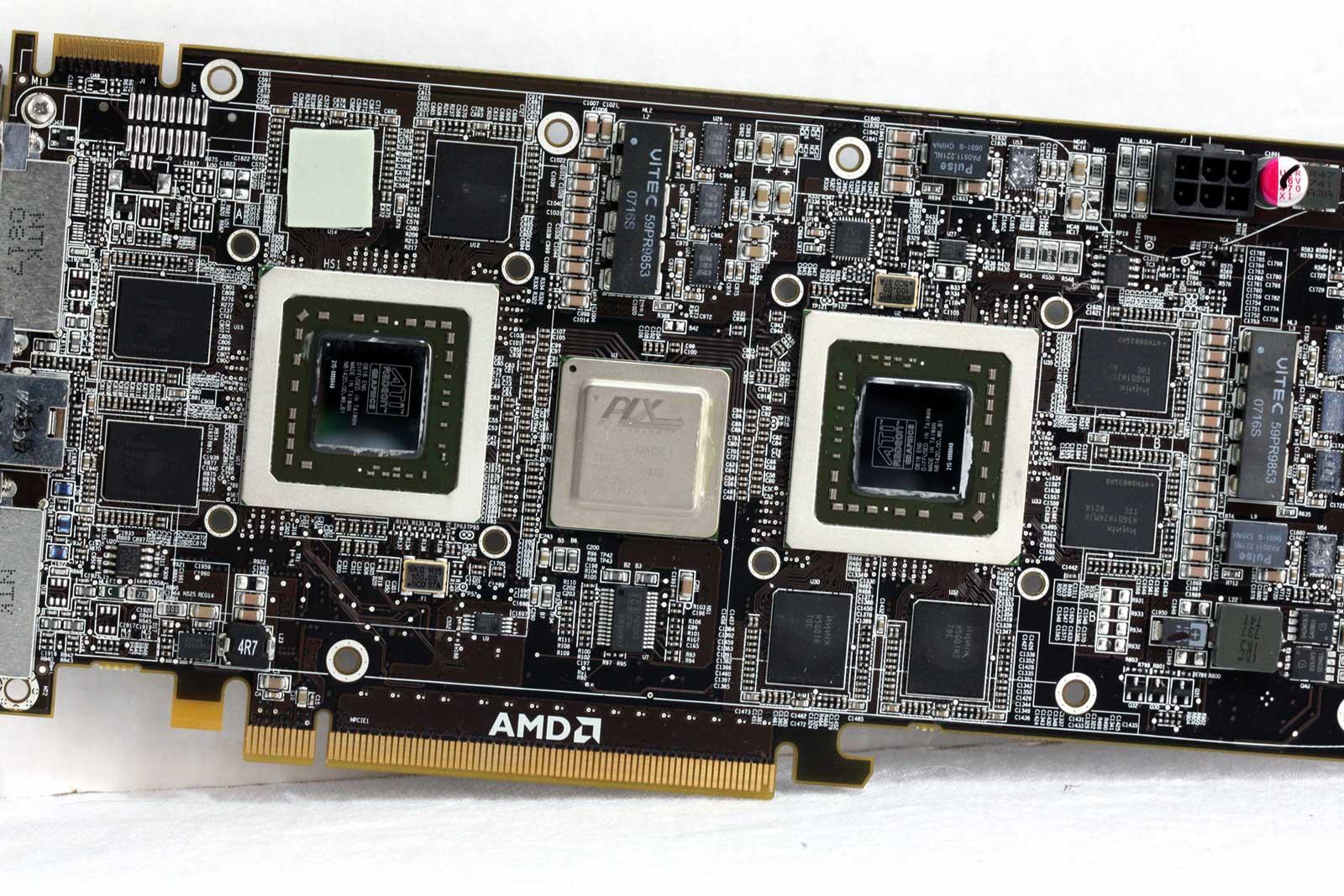

Source: Wikimedia Commons

Source: Guru3D

Not only did it completely eclipse every offering from Nvidia’s GeForce 9000 lineup, but its top performers also went toe-to-toe with Nvidia’s flagship GeForce GTX 200 series.

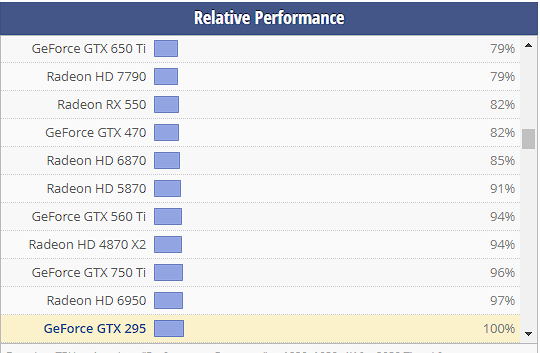

However, AMD quickly lost the crown to Nvidia once more as the latter managed to eke out a slim victory with its GeForce GTX 295 in early 2009.

That said, many contended that Radeon’s approach of ‘gluing’ 2 GPUs onto a single board wasn’t as good as a single powerful GPU (with good reason).

GeForce GTX 295 Performance

On a side note, TechPowerUp’s GPU database is a boon to hardware nerds like me, and huge props to them for maintaining and updating it for so long!

AMD vs. Nvidia in the Workstation/Professional Market

Graphics processing has come a long way since the days of Voodoo cards. But the companies in the ring (for desktop PCs) remain nearly the same. Yes, AMD acquired ATI, but otherwise, there’s no change in competition.

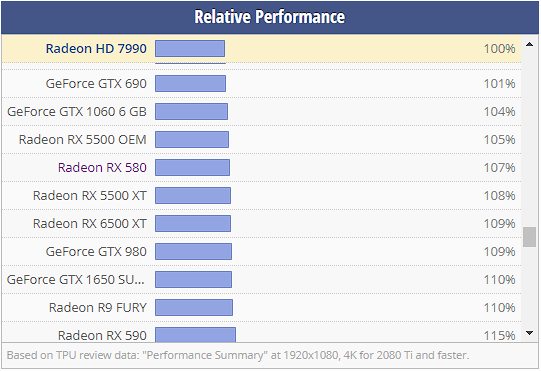

Apart from Radeon’s lean period, i.e., from 2014 (after the Radeon HD 7000 series) to around 2019 (until the launch of the RX 5000 series), AMD and Nvidia have generally competed pretty evenly in the gaming space.

However, AMD’s lean years were…LEAN. Here’s an interesting tidbit: AMD couldn’t beat the performance level of its own Radeon HD 7990 graphics card by much for over four years!

Radeon HD 7990 Performance

For reference, the Radeon HD 7990 was AMD’s top-of-the-line offering back in 2013. Yet even the Radeon RX 590, a late-2018 refresh of AMD’s Polaris architecture, only edged past it.

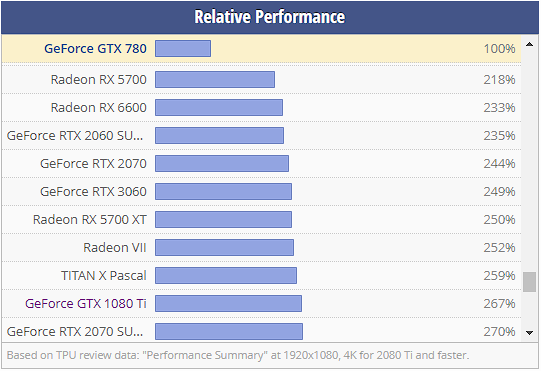

A 15% uplift in graphics performance over 4 years is horrendous. It looks even worse next to Nvidia, which was busy obliterating its own GPU records and delivered a whopping 267% improvement in performance over the same period.
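To put those two figures on the same footing, here’s a quick back-of-the-envelope sketch that converts each total gain into an average yearly (compounded) rate. It assumes the roughly four-year window stated above and reads “267% improvement” as 3.67x the starting performance; if the chart actually shows the newer card at 267% of the older one (2.67x), plug in 1.67 instead.

```python
def annualized_gain(total_gain: float, years: float) -> float:
    """Average yearly gain that compounds to `total_gain` over `years`.

    `total_gain` is a fraction: 0.15 means +15%, 2.67 means +267%.
    """
    return (1 + total_gain) ** (1 / years) - 1


# Figures quoted in the article; the 4-year window is the article's own estimate.
amd = annualized_gain(0.15, 4)     # AMD: +15% in total over the period
nvidia = annualized_gain(2.67, 4)  # Nvidia: +267% in total over the same period

print(f"AMD:    {amd:.1%} per year")
print(f"Nvidia: {nvidia:.1%} per year")
```

Under those assumptions, AMD averaged roughly 3.6% a year, while Nvidia averaged roughly 38% a year.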

Nvidia GTX 780 Performance

That said, the performance disparity was only one factor in the widespread adoption of Nvidia cards in professional apps.

Addressing the CUDA in the Room

CUDA, or Compute Unified Device Architecture, is Nvidia’s proprietary parallel computing platform and API that lets developers run parallel tasks efficiently on Nvidia graphics chips.

It focuses on parallelizing operations and is perfect for tasks that can be broken down into smaller sub-tasks to be handled concurrently.

GPU rendering is one great example.
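Here’s a minimal sketch of that idea, written in Python rather than CUDA (which needs an Nvidia GPU to run). A tiny “frame” is split into rows, each row is an independent sub-task, and a pool of workers renders the rows concurrently. The `shade` function is a hypothetical stand-in for real ray tracing work, and the thread pool only mirrors the *structure* of a GPU workload: Python threads won’t deliver GPU-like speedups, whereas a real GPU renderer runs thousands of such tasks at once across its cores.

```python
from concurrent.futures import ThreadPoolExecutor

WIDTH, HEIGHT = 64, 48  # hypothetical tiny frame size


def shade(x: int, y: int) -> float:
    """Hypothetical per-pixel work: a simple gradient stands in for tracing rays.

    Each call depends only on its own (x, y), so pixels are independent.
    """
    return (x / (WIDTH - 1) + y / (HEIGHT - 1)) / 2


def render_row(y: int) -> list[float]:
    # One sub-task = one row of pixels.
    return [shade(x, y) for x in range(WIDTH)]


def render_serial() -> list[list[float]]:
    # Baseline: render every row one after another.
    return [render_row(y) for y in range(HEIGHT)]


def render_parallel(workers: int = 8) -> list[list[float]]:
    # Rows are handed out to a pool of workers; map() returns results in row order.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(render_row, range(HEIGHT)))


if __name__ == "__main__":
    image = render_parallel()
    assert image == render_serial()  # splitting the work doesn't change the result
    print(len(image), "rows x", len(image[0]), "pixels")
```

Because no pixel depends on any other, the parallel result matches the serial one exactly. That independence is what makes rendering such a good fit for CUDA-style parallelism.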

The attention Nvidia heaped on the professional space was instrumental in developing an ecosystem of CUDA-accelerated pro apps.

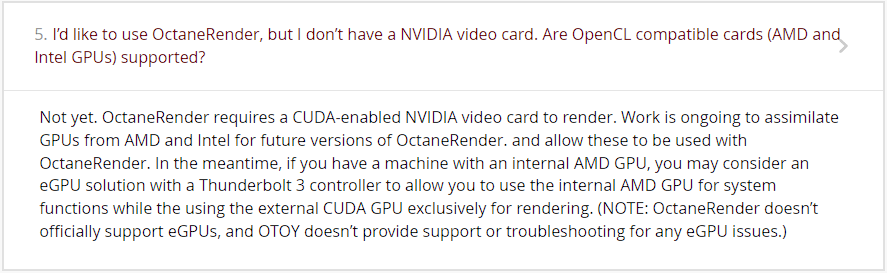

Unfortunately, AMD just got left behind during this time. Notes like this one from OctaneRender were common across many professional applications:

Required CUDA Support for Octane Render GPU Engine

While Nvidia developed its proprietary CUDA platform, AMD chose to rely on OpenCL – an open framework for writing programs that can execute across any processing unit, including Central Processing Units, Graphics Processing Units, Field-Programmable Gate Arrays, etc.

However, AMD’s lackluster performance and some complications in developing for OpenCL meant that professional apps heavily favored CUDA rather than OpenCL.

So, Nvidia not only completely eclipsed Radeon GPUs in performance, but it also invested in encouraging an ecosystem of CUDA-accelerated pro applications.

This combination of a robust ecosystem and raw performance made Nvidia the GPU of choice for ANY professional task for over half a decade.

Sprinkle in Some OptiX for Good Measure

Nvidia wasn’t done with just CUDA, though.

OptiX is a proprietary framework from Nvidia, built on top of CUDA, that accelerates ray tracing on Nvidia graphics cards. Think of it as a sibling to CUDA: where CUDA targets general-purpose compute, OptiX focuses specifically on ray tracing calculations.

By the way, did you know that Nvidia first launched OptiX well over a decade ago? Well, they did, in 2009.

However, the GPUs at the time weren’t particularly adept at rasterization (by modern standards), let alone handling ray-traced scenes effectively. Little did we know that it would take nearly a decade for that to change.

(Nvidia’s official OptiX page is worth a look if you want to find out more.)

Then, in 2018, came Nvidia’s RTX series (with its less cool full form, Nvidia Ray Tracing Texel eXtreme), featuring dedicated ray tracing (RT) cores.

When they launched, the market scrambled to make sense of the new RTX graphics cards.

Gamers got busy figuring out whether a ray-traced game looked better than its regular counterpart. At the same time, professionals wondered whether this technology could help them work faster.

The answer to the first question in most cases is ‘sure, if you look close enough,’ but the answer to the second question turned out to be – ‘wow, definitely yes.’

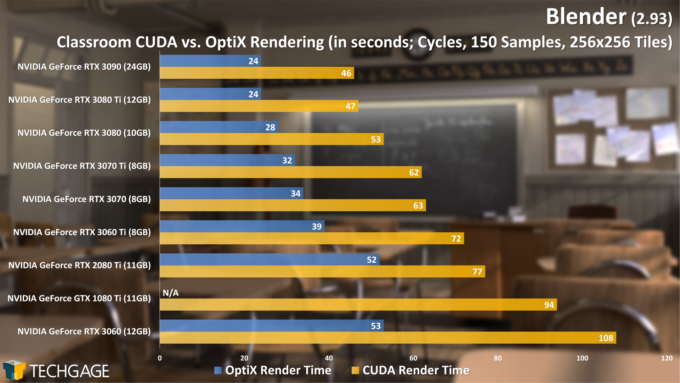

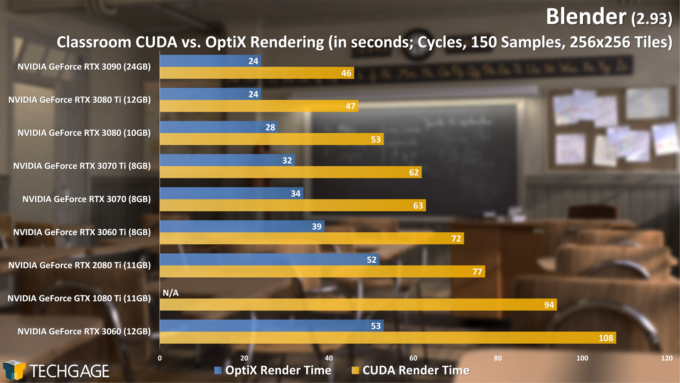

Here’s an example of what I’m talking about (using Blender’s Cycles Renderer).

Blender 2.93 – Cycles OptiX Render Performance (Classroom) (June 2021)

While the RTX cards can leverage Nvidia’s OptiX ray tracing engine, that poor GTX 1080 Ti (a rendering powerhouse of its time) is left with only CUDA acceleration.

The difference? Well, the chart tells it best.

When you look at the CUDA render times, an RTX 3060 is no match for the GTX 1080 Ti.

However, add OptiX into the equation, and the GeForce RTX 3060 suddenly obliterates the once-mighty GTX 1080 Ti, cutting render times in half!

You’ll find a similar story unfolding across the board when it comes to CUDA vs. OptiX.

AMD Radeon vs. Nvidia GeForce in 2022

Even we at CGDirector have always recommended Nvidia graphics cards exclusively for GPU-heavy pro workloads.

They offered the broadest application support, excellent performance, and relatively stable drivers – a trifecta for workstations.

Have things changed in 2022? Let’s look at a few benchmarks and find out!

Nvidia vs. AMD Benchmarks for GPU Rendering

Redshift

Redshift is one of the world’s most popular GPU render engines, and Maxon (the creators of Cinema 4D) recently acquired and brought it under their suite of creative apps.

Until last year, Redshift offered only CUDA support, which means it worked only with Nvidia GPUs.

Things changed this year for a couple of reasons. One is Apple’s push for Radeon on the Mac platform; the other is AMD’s performance uplift after years of lagging behind Nvidia.

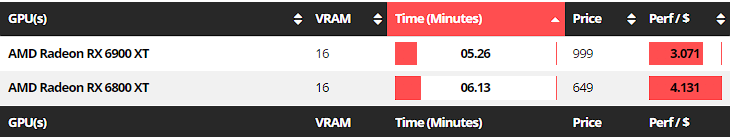

Redshift GPU Rendering Performance on AMD GPUs

Yes, the AMD numbers look awful if you compare the performance between Nvidia and AMD on Redshift.

Redshift GPU Rendering Performance on Nvidia GPUs

However, before I dissect this, it’s important to note that the Radeon numbers come from early beta versions of C4D and Redshift on macOS.

The performance is basically what you get now, but things might improve as drivers and applications add optimization for Radeon cards.

For now, let’s circle back to the performance here.

A single $329 (MSRP) RTX 3060 finishes just 10 seconds behind a single $999 Radeon RX 6900 XT.

Sadly, it’s not even close, and it’s not worth going AMD if you depend on Redshift as your primary GPU renderer.

Verdict: Go Nvidia. AMD support isn’t great at the moment.

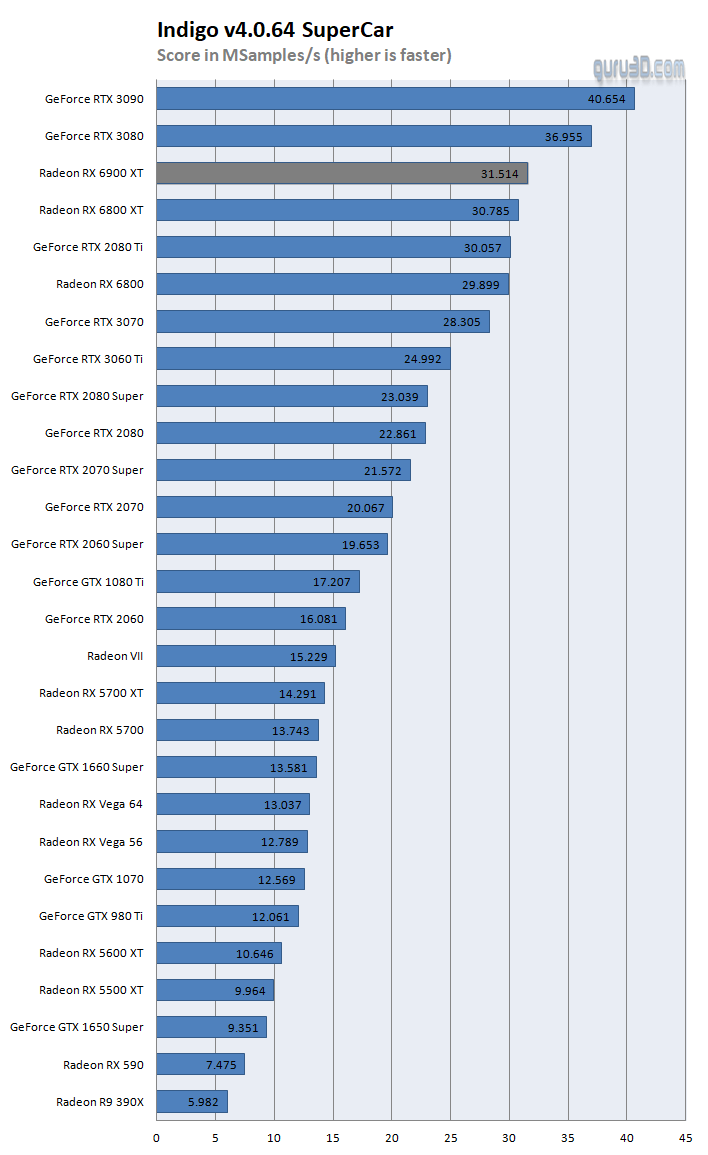

Indigo Renderer

Indigo Renderer defines itself as an ‘OpenCL-based GPU engine to offer industry-leading performance on Nvidia and AMD graphics cards.’

Since AMD relies on OpenCL, things should be quite a bit better for Team Red, right?

Indigo Render Benchmark Chart

Yes, it is (somewhat).

AMD manages to claw back some lost ground when using Indigo Renderer for GPU rendering, but its showing is far from impressive.

Nvidia completely obliterates the top-tier Radeon RX 6900 XT with its GeForce RTX 3090.

Even in the mainstream offerings from both companies, Nvidia offers the better product with its GeForce RTX 3080 outperforming a similarly-priced Radeon RX 6800 XT.

Verdict: Go Nvidia, but AMD isn’t bad.

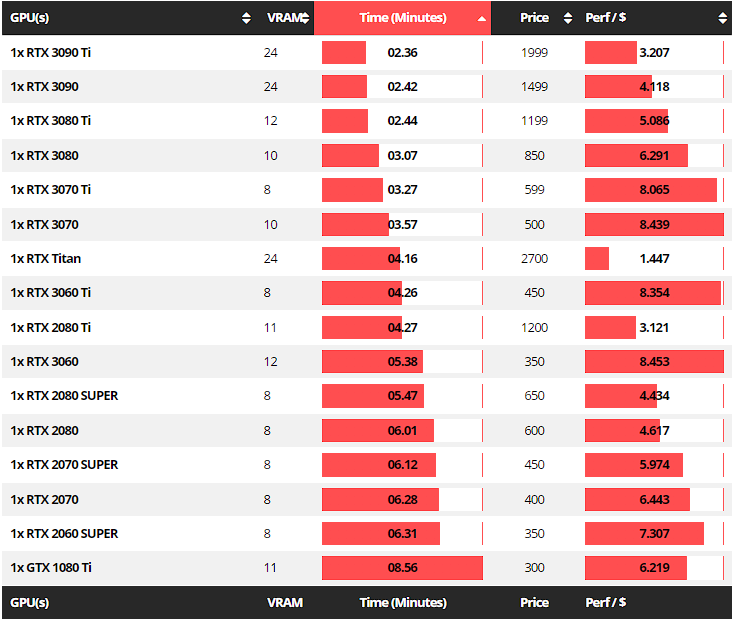

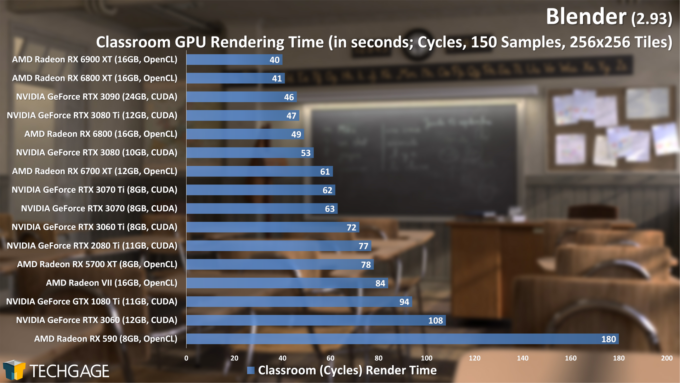

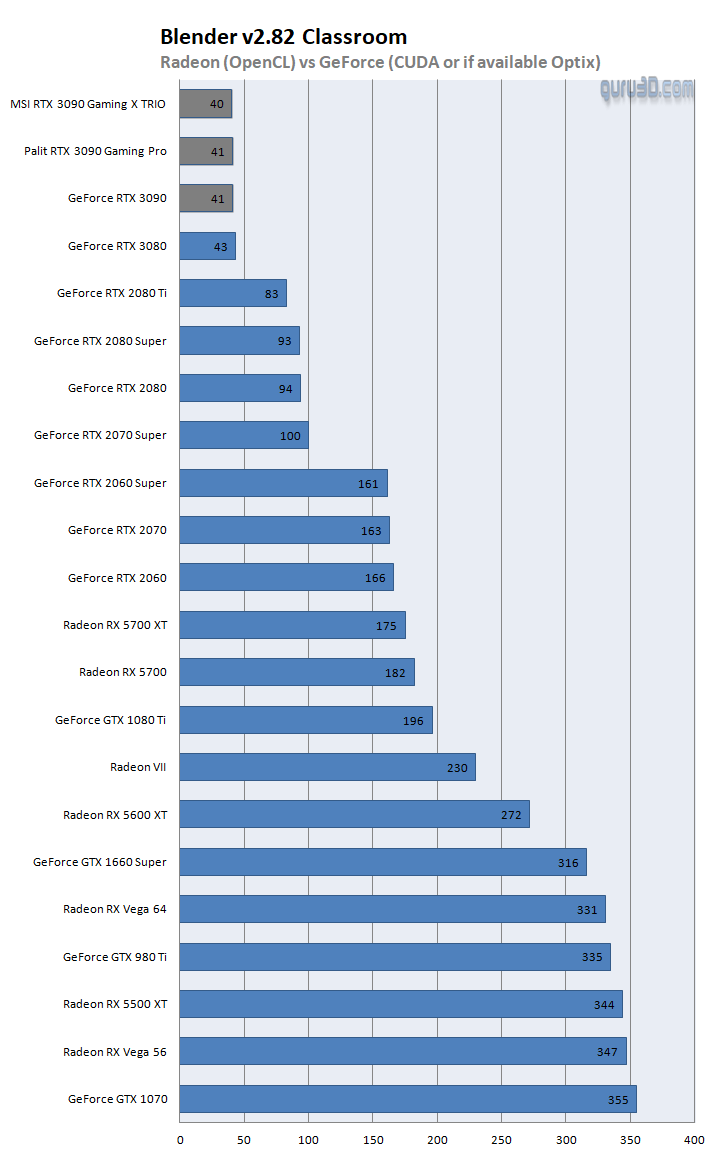

Blender Classroom GPU Render (OpenCL / CUDA / Optix)

Before even looking at the results, I knew this one would be painful for AMD.

The Nvidia OptiX ray tracing engine offers impressive performance in professional apps that leverage its ray tracing acceleration. Just looking at the mile-long laundry list of partners on its page should be clue enough:

Nvidia OptiX Partners

Blender’s Cycles renderer can now leverage OptiX when using RTX graphics cards, making it much faster than CUDA or OpenCL.

Now, with that out of the way, let’s see how Radeon (using OpenCL) fares against Nvidia (CUDA) in the famed Blender Classroom render using Cycles, shall we?

Blender 2.93 – Cycles GPU Render Performance (Classroom) (June 2021)

AMD’s recent improvements have allowed it to catch up to Nvidia’s CUDA-accelerated performance.

Unfortunately for AMD, RT acceleration through OptiX has effectively halved render times on Nvidia’s RTX cards, as you can see below:

Blender OptiX GPU Render Benchmark

Blender Classroom GPU Render Benchmark

Nvidia’s RTX 3000 lineup completely crushes Radeon when it comes to performance in GPU rendering using Cycles.

AMD’s showing here is so bad that the ~$400 Nvidia RTX 3060 Ti easily beats an AMD Radeon RX 6900 XT! We’ve seen similar results in our own tests, and several reviewers report similar margins.

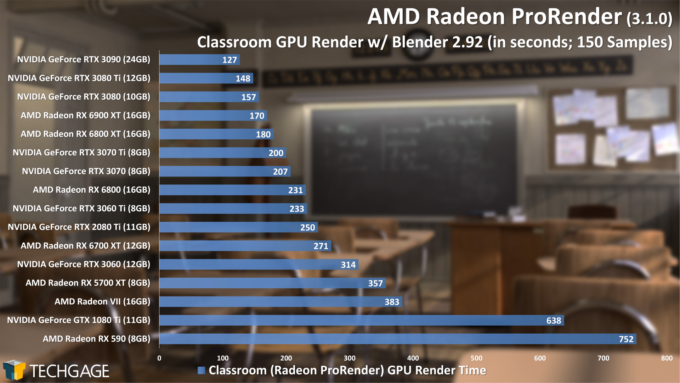

Let’s give AMD another shot, using its own Radeon ProRender for Blender.

AMD Radeon ProRender Performance – Blender Classroom Scene (June 2021)

Well, it doesn’t look too great either.

Even setting aside that ProRender takes much longer than Blender Cycles to complete the Classroom render, Nvidia adds insult to injury by outperforming AMD in AMD’s own renderer.

An RTX 3080 still outpaces AMD’s best offering by a decent margin while costing less money.

Verdict: Go Nvidia. Radeon GPUs do work, but not well enough to recommend.

Nvidia vs. AMD Benchmarks for 3D Modeling

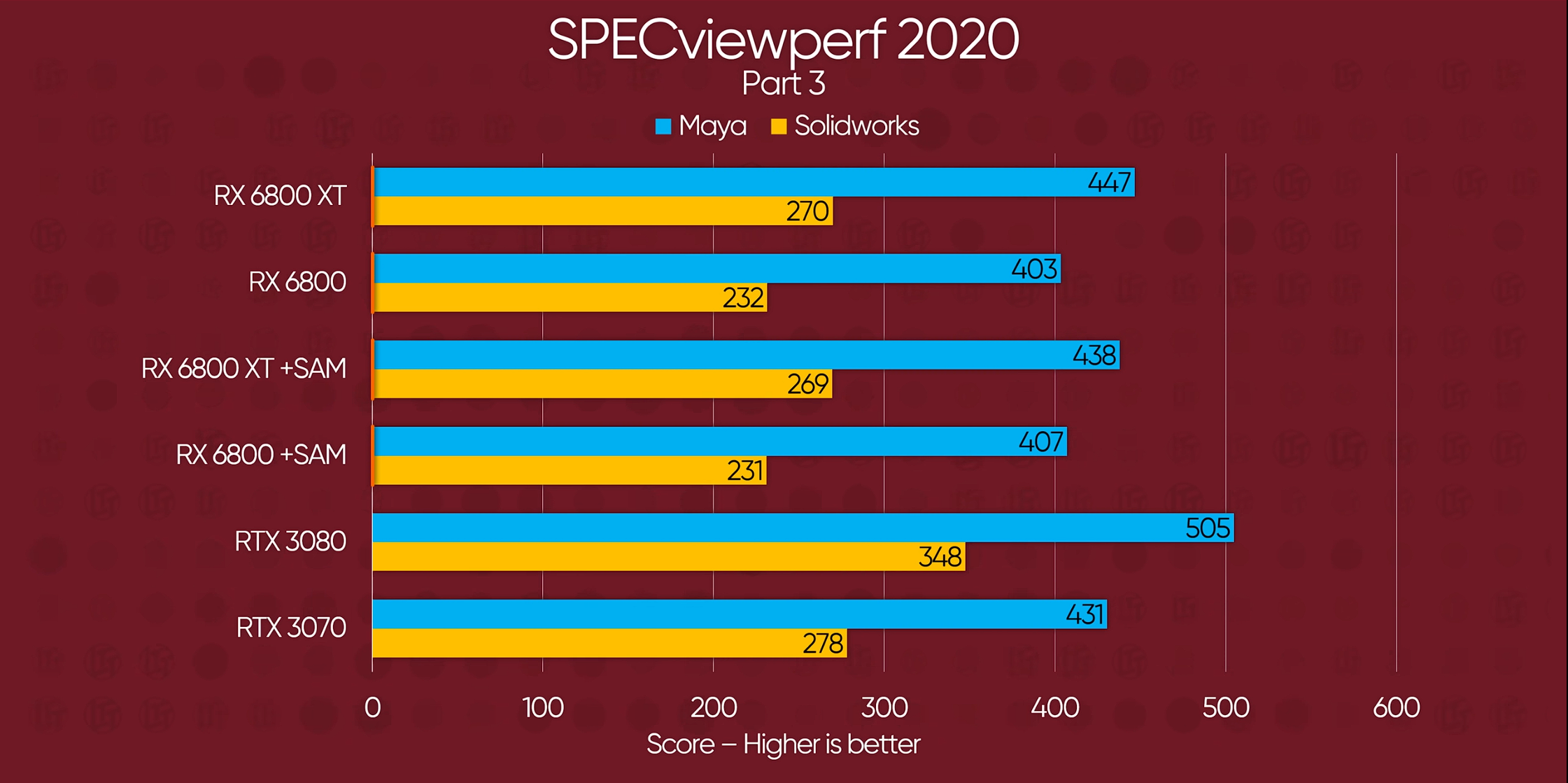

Autodesk Maya and Solidworks

Both Maya and Solidworks are well-known professional 3D modeling applications.

While Solidworks generally appeals to design professionals working on the engineering side of things, Maya is more popular among creative professionals.

Performance for AMD in Maya and Solidworks isn’t too bad. Nonetheless, Nvidia manages to best AMD without too much trouble.

Since the GeForce RTX 3070 easily keeps up with the pricier Radeon RX 6800 XT, Nvidia remains the right choice for professionals using either Maya or Solidworks.

Note – Some features like Solidworks RealView are only available on supported workstation GPUs from either AMD or Nvidia. So, you won’t be missing out on any significant feature regardless of whether you go with workstation graphics cards from Team Green or Team Red.

Verdict: Go with Nvidia. Although AMD cards perform well, Nvidia beats them in price to performance quite handily.

Workstation Benchmarks for Video Editing

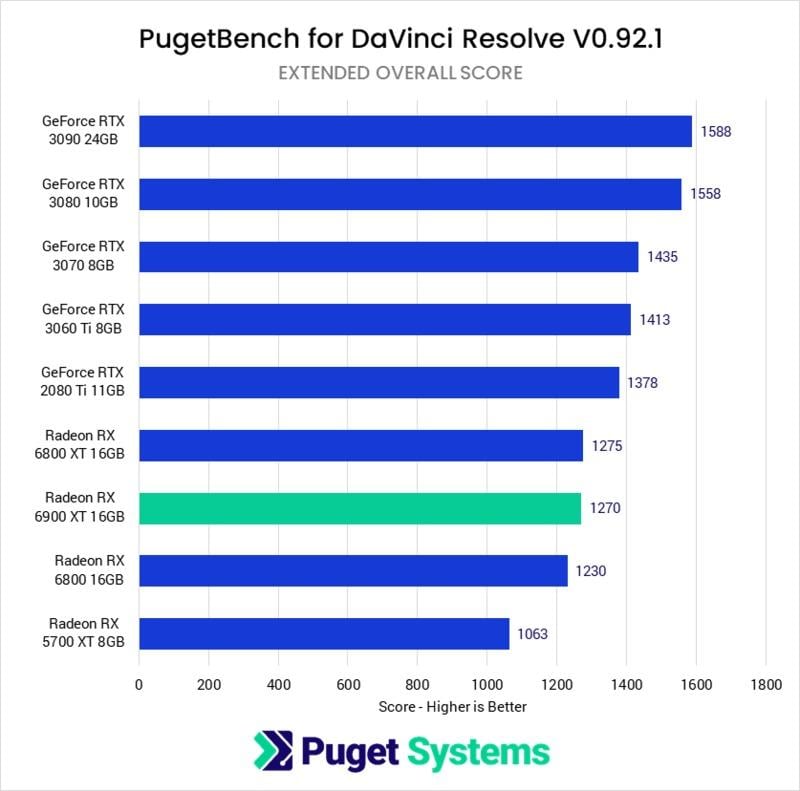

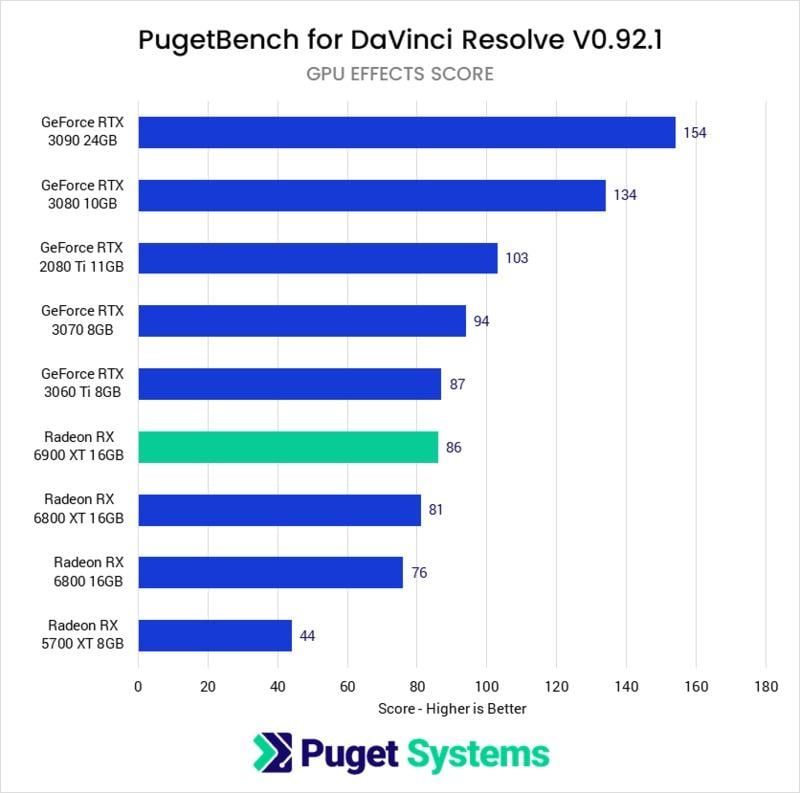

DaVinci Resolve (PugetBench)

DaVinci Resolve might not have the reach or renown of Adobe’s Premiere Pro yet, but it has become pretty well-known in the industry these past few years. It also handles multiple GPUs and CPU cores quite efficiently.

Puget Systems pitted AMD Radeon’s best against Nvidia’s RTX cards to see who comes out on top. Let’s see how it looks!

Pugetbench Davinci Resolve Benchmark Comparison – GPU

Pugetbench Davinci Resolve Benchmark Comparison – GPU Effects Score

Unfortunately, AMD’s woes don’t seem to end at GPU rendering.

Even the much cheaper Nvidia GeForce RTX 3060 Ti manages to embarrass the top Radeon card by a decent margin, while the RTX 3090 and RTX 3080 are in a league of their own.

If you’re working with DaVinci Resolve, Nvidia offers way better value.

Verdict: Go Nvidia. AMD cards work, but not well.

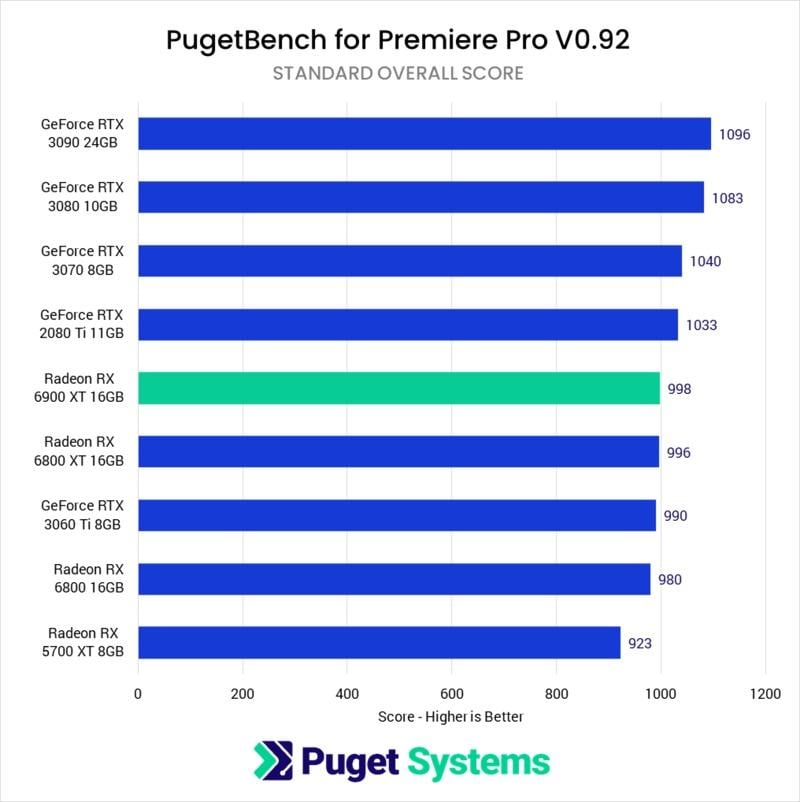

Adobe Premiere Pro (PugetBench 0.92)

Premiere Pro is pretty much the industry standard for professional video editing. What’s more, it’s been around forever, with its first version launching way back in 2003.

Today, it is an integral part of the Adobe Creative Cloud license that boasts over 26 million paid subscribers.

Until recently, AMD cards performed quite poorly on Premiere Pro. But Adobe has pushed updates to better support Radeon GPUs over the past couple of years, improving performance.

Let’s see how AMD Radeon GPUs hold up compared to Nvidia’s RTX lineup in 2022!

Pugetbench Premiere Pro Benchmark Comparison – GPU Standard overall score

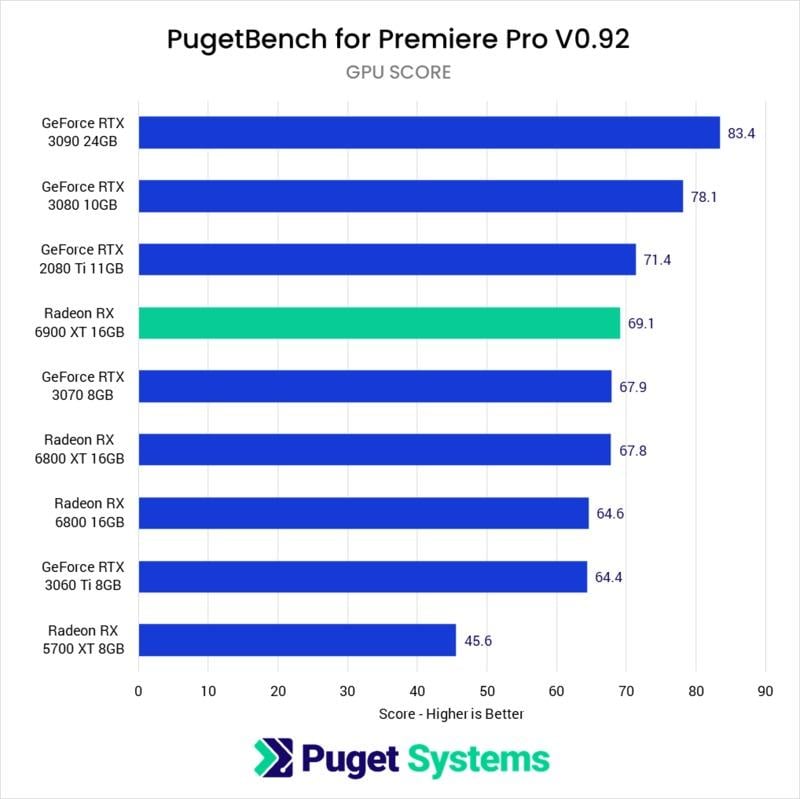

Pugetbench Premiere Pro Benchmark Comparison – GPU score

Although Radeon graphics cards fare slightly better in Premiere Pro (compared to DaVinci Resolve), Nvidia still manages to edge them out comfortably.

The much cheaper Nvidia RTX 3080 completely outclasses anything AMD offers, while the RTX 3060 Ti can easily match pricier competitors from the Radeon stable.

That said, note that the Radeon RX 6800 XT and RX 6900 XT scoring so close to each other could point to an optimization issue.

Verdict: Go Nvidia. But AMD isn’t completely horrible here.

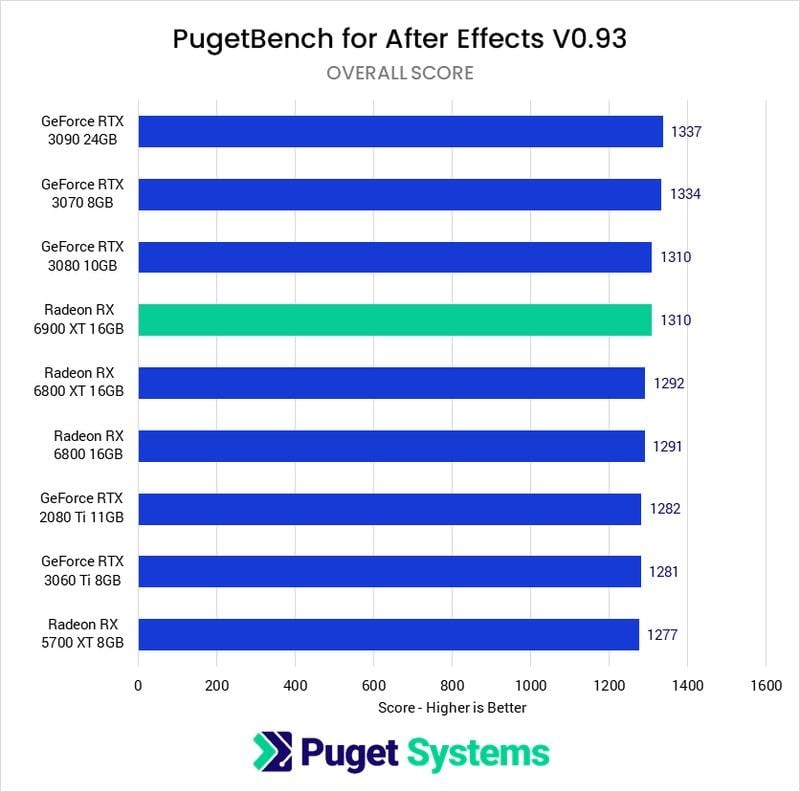

Adobe After Effects (PugetBench)

It’s not too far-fetched to say that After Effects is the industry standard for motion graphics in 2.5D or pseudo-3D.

Although it’s known for chugging down all the system RAM you can throw at it, After Effects’ GPU requirements aren’t that demanding.

Here’s a benchmark from Puget Systems:

Pugetbench After Effects Benchmark Comparison – GPU overall score

Performance is so close across modern graphics cards that you get a few comical results.

For example, the 8GB GeForce RTX 3070 outpaces a 10GB GeForce RTX 3080!

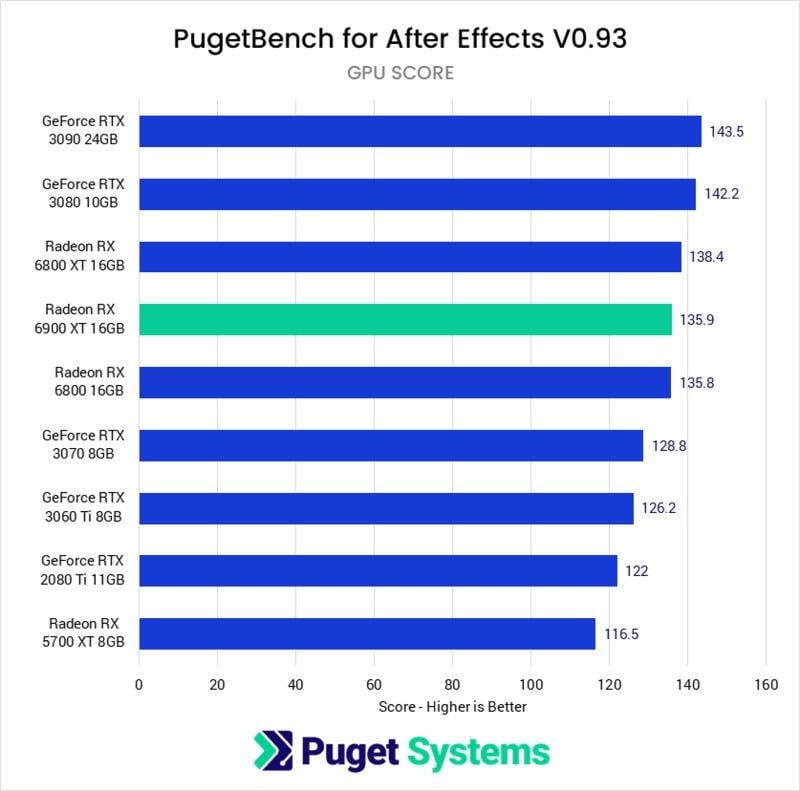

The dedicated GPU scores also tell a similar story:

Pugetbench After Effects Benchmark Comparison – GPU score

Things look quite a bit better for Team Red (finally) when competing against Nvidia for After Effects.

Admittedly, this could come down to After Effects being bottlenecked by something other than the GPU because most tasks on it aren’t too GPU-bound past a certain point.

For the most part, you can expect a similar experience with Radeon or GeForce RTX cards. However, Nvidia is still the way to go for professionals who want the best value and performance on the market right now.

Verdict: Go Nvidia or AMD. Both are similar, with Nvidia faring just a tiny touch better.

Nvidia or AMD: Which is the Best Choice for Workstations in 2022?

Across the variety of workloads we’ve explored, AMD doesn’t manage to offer good value for those using pro apps.

Granted, there are some exceptions (Indigo Renderer, for example), but it’s mostly just a train wreck of a showing for Team Red.

This is disappointing, not just for AMD, but for professionals as well. Competition in the market is always welcome, and for a decade now we’ve been overly reliant on Nvidia to deliver the performance we need to get work done.

Don’t get me wrong, though. This isn’t a knock against Nvidia at all.

They’re designing and launching incredible products, and the performance uplift is more than welcome.

For better or worse, Nvidia continues to dominate AMD in the workstation space, and there’s just no way that we could recommend AMD outright for even a single workload we covered.

Over to You

So, what do you think about the GPU landscape in 2022? Let us know in the comments below or on our forum!
